Sunday’s issue is free to non-subscribers. Consider becoming a paid subscriber to RMN to receive weekday newsletters.

Catastrophe Q&A: Model Maker

Temblor’s Ross Stein

Last week researchers from Temblor, Inc. and Tohoku University released research that suggests earthquake risk in many regions may be underestimated.

Risk Market News spoke with Dr. Ross Stein, CEO of catastrophe modeling firm Temblor, about the implications of the research for the modeling industry and insurers.

Risk Market News: What made you decide to study this issue?

Ross Stein: Our interest began with the Ridgecrest events that occurred over a year ago, which included a magnitude 6.4 quake followed 34 hours later by a magnitude 7.1 quake. They struck on faults that were unknown and unmapped. That’s a rather remarkable case of unforeseen earthquake occurrence, given that California is one of the three most well-mapped places on earth.

As a community, we were caught flatfooted. We hadn't foreseen the likelihood of such a large earthquake striking in an area without a large fault. That meant we better start asking ourselves what can we foresee now, in the wake of this quake?

RMN: Perhaps you could describe the new fault that was discovered.

Stein: It's important to realize that, particularly for the insurance industry, all earthquake risk models are fault-based. The assumption is that we know all the faults on which large, damaging quakes can strike. The maximum magnitude of earthquakes on those faults is based on the fault lengths, or the lengths between bends and breaks on the fault if it's a very long fault. The occurrence rate of earthquakes is determined by the long-term slip rate of a fault. Areas off the fault are populated with something called ‘area sources.’

But events like the 1994 M 6.7 Northridge shocks, as well as large earthquakes elsewhere in the world over the last decade, have proved those assumptions wrong.

We've had earthquakes, like the 2011 magnitude 9.0 quake in Tōhoku and New Zealand’s magnitude 7.8 in 2016, which were much bigger than the model builders thought were possible on individual, smaller faults. And in Japan, California, and New Zealand, the three best-mapped places in the world, we've had magnitude 7+ earthquakes strike on unknown faults.

So, however convenient it is to assume that we know the maximum size of an earthquake that could occur anywhere, and its frequency of occurrence (or ‘return period’), it’s wrong. We shouldn't be building models this way.
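The fault-based recipe Stein describes (maximum magnitude from fault length, occurrence rate from slip rate) can be illustrated with a minimal sketch. This is not Temblor's or any vendor's method. It assumes the widely cited Wells and Coppersmith (1994) all-slip-type regression for magnitude versus surface rupture length and a simple moment-balance estimate of recurrence. The fault dimensions, slip rate, and rigidity are hypothetical values chosen only for illustration.

```python
import math


def max_magnitude_from_length(rupture_length_km: float) -> float:
    """Magnitude from surface rupture length (km).

    Assumes the Wells & Coppersmith (1994) all-slip-type regression.
    """
    return 5.08 + 1.16 * math.log10(rupture_length_km)


def recurrence_interval_years(
    magnitude: float,
    length_km: float,
    width_km: float,
    slip_rate_mm_per_yr: float,
    rigidity_pa: float = 3.0e10,  # assumed crustal shear modulus
) -> float:
    """Years needed for the long-term slip rate to rebuild one event's slip."""
    # Seismic moment in N*m from moment magnitude (constant 9.1 convention).
    moment_nm = 10 ** (1.5 * magnitude + 9.1)
    area_m2 = (length_km * 1e3) * (width_km * 1e3)
    # Average slip per event follows from moment = rigidity * area * slip.
    avg_slip_m = moment_nm / (rigidity_pa * area_m2)
    return avg_slip_m / (slip_rate_mm_per_yr * 1e-3)


# Hypothetical fault: 100 km long, 15 km deep, slipping 5 mm per year.
length_km = 100.0
m_max = max_magnitude_from_length(length_km)
interval = recurrence_interval_years(
    m_max, length_km, width_km=15.0, slip_rate_mm_per_yr=5.0
)
print(f"M_max ~ {m_max:.1f}, recurrence ~ {interval:.0f} years")
```

Both outputs depend entirely on the mapped length and slip rate, so a fault that is unmapped, or one that is longer at depth than it appears at the surface, never enters the calculation. That is the weakness Stein is pointing to.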

RMN: What is lacking? Is it an unknown that all science-based research faces or is it a failure of some sort of process?

Stein: That’s an important question.

The problem is that earthquakes occur about 10 miles down, but we're imprisoned on the surface of the earth. The surface trace of faults may or may not be representative of what's at depth. The continuity of faults at the depths where quakes nucleate and propagate is often quite different from what we see at the surface.

As an example, at Ridgecrest, there are a bunch of little ‘wannabe’ faults in the area, but nothing that was linked up. But it turns out that a few miles down they were all joined in a fault that was straight as an arrow. So, we were misled by the clues we have at the surface. It's an extremely difficult art. Our company, Temblor, shows faults in its app and hazard maps from 80 countries around the world. We believe that this information is extremely valuable despite the enormous difficulty in obtaining it, despite its incompleteness. But once we accept that it is incomplete, then we need to build models that are not solely dependent on it. That’s what we set out to do.

If we can't produce an adequate inventory of faults in Japan, California, and New Zealand, we're never going to get them in the rest of the world. That's why Temblor builds its seismic risk models without basing them on faults. Instead, we use crustal strain, which is uniformly measured worldwide by GPS. Strain is a very good proxy for faults, because you cannot have strain buildup without its release in earthquakes.

RMN: Let me ask you about this specific research. What does a failure of the Ridgecrest fault practically mean?

Stein: Ridgecrest was a remarkable sequence that we can learn a great deal from. It began with a magnitude 6.4 earthquake on what is basically an ‘L-shaped’ fault. Then, 34 hours later, an extension of one of those L features ruptured in a magnitude 7.1.

We can look retrospectively and say that the first earthquake stressed the site of the second, which promoted the second earthquake rupture. This is an important indication that stress transfer is fundamental to earthquake interactions: an earthquake on one fault can inhibit quakes on some faults, or promote them on others.

But then we see something else that’s just as interesting. The moment that 7.1 occurs, the aftershocks on the other leg of the L completely shut down. They stopped, because that leg fell under the “stress shadow” of the second shock. But what does that mean?

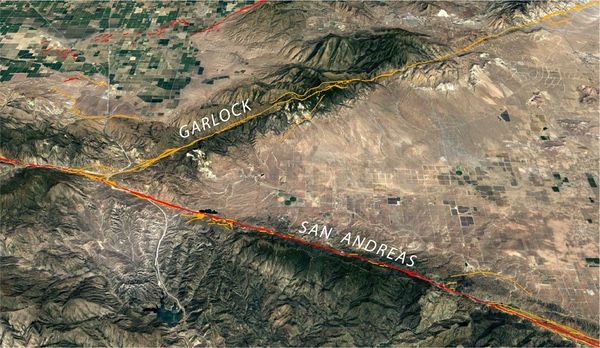

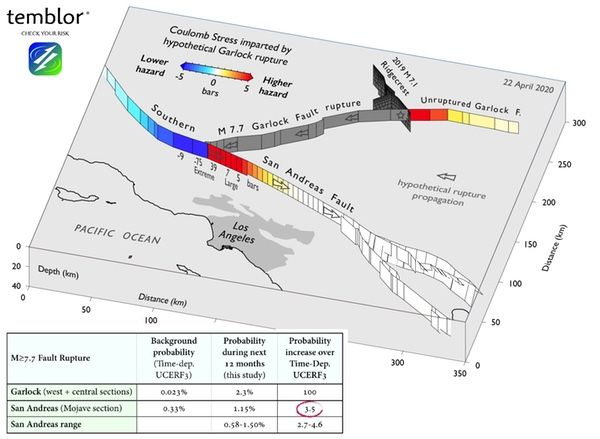

It means that if we can make these calculations as to how one big earthquake changes the conditions for failure on faults around it, we have something with forecast power. We may not have been able to predict the occurrence of this sequence as a whole. We didn't see the magnitude 6.4 coming, for example, except retrospectively. But now that the 6.4 and 7.1 have occurred, we can easily calculate how those events changed the conditions for failure on the surrounding faults. And that's what leads us to the conclusion that the Garlock Fault is now in play, because it's been stressed by both the 6.4 and the 7.1.
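The interview does not say which formulation the researchers used. A common way to express "changed conditions for failure" in the stress-transfer literature is the Coulomb failure stress change: the shear stress change in the slip direction plus an effective friction coefficient times the normal stress change (positive when the fault is unclamped). The sketch below assumes that formulation. The function names, the friction value of 0.4, and the input stress values are all hypothetical.

```python
def coulomb_stress_change(
    d_shear_mpa: float,
    d_normal_mpa: float,
    effective_friction: float = 0.4,  # assumed value, often varied in practice
) -> float:
    """Coulomb failure stress change on a receiver fault, in MPa.

    d_shear_mpa:  shear stress change resolved in the fault's slip direction.
    d_normal_mpa: normal stress change, positive when the fault is unclamped.
    """
    return d_shear_mpa + effective_friction * d_normal_mpa


def classify(dcfs_mpa: float) -> str:
    """Positive change brings a fault closer to failure; negative is a stress shadow."""
    if dcfs_mpa > 0:
        return "promoted"
    if dcfs_mpa < 0:
        return "inhibited (stress shadow)"
    return "unchanged"


# Hypothetical stress changes on two receiver faults after a mainshock.
receivers = {
    "fault A": (0.20, 0.10),
    "fault B": (-0.30, 0.05),
}
for name, (d_shear, d_normal) in receivers.items():
    dcfs = coulomb_stress_change(d_shear, d_normal)
    print(f"{name}: dCFS = {dcfs:+.2f} MPa, {classify(dcfs)}")
```

In this framing, the Garlock Fault would be a receiver with a positive change from both Ridgecrest shocks, while the leg of the L whose aftershocks shut down would be one with a negative change.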

RMN: Can you estimate the possible magnitude of a failure on the Garlock Fault and on the San Andreas Fault?

Stein: Great question, but it's a very difficult one. What we can say is that when we make our forecast calculation based on this transfer of stress, we can see that if we add up all of the stress along the Garlock Fault, a magnitude 7.7 earthquake could fit in that region. For an earthquake of magnitude 7.7, its occurrence likelihood is a hundred times higher than what it was the year before the earthquake. But we don’t know what size quake the Garlock could produce, except to say that smaller magnitudes are more likely.

But the Garlock Fault could be just a link in a chain, because the Garlock Fault connects the Ridgecrest Fault to the San Andreas. We calculate that a Garlock rupture that gets within 45 km (25 mi) of the San Andreas would increase the chance of a San Andreas rupture by a factor of 150, bringing the chances of a San Andreas rupture in the next year to about 1.15%, or 3.5-5.0 times higher than the reference USGS model. And this is the portion of the San Andreas closest to greater Los Angeles.
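As a back-of-the-envelope check on those figures, the quoted 1.15% annual chance and the 3.5-5.0 multiple together imply a reference annual probability of roughly 0.23% to 0.33%. The sketch below only rearranges the numbers quoted above. It assumes "times higher" means a simple ratio of elevated to reference probability, and the variable and function names are illustrative.

```python
# Figures quoted in the interview.
elevated_annual_prob = 0.0115          # about 1.15% chance in the next year
ratio_low, ratio_high = 3.5, 5.0       # multiple of the reference USGS model


def implied_reference(elevated: float, ratio: float) -> float:
    """Reference probability implied by an elevated probability and its multiple."""
    return elevated / ratio


# A larger multiple implies a smaller reference probability, and vice versa.
ref_min = implied_reference(elevated_annual_prob, ratio_high)
ref_max = implied_reference(elevated_annual_prob, ratio_low)

print(f"Implied reference annual probability: {ref_min:.2%} to {ref_max:.2%}")
```

The factor of 150 is a different quantity: it applies only in the scenario where a Garlock rupture actually reaches within the stated distance of the San Andreas, so it cannot be divided into the 1.15% figure in the same way.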

RMN: Are existing models, used by both the research community and the private market, really taking this research and this risk on the Garlock Fault into account?

Stein: My answer would be no. The current models out there are not dynamic, and they don't update when we have bigger earthquakes that change the conditions for failure. If we want to know how the hazard has changed, we need to account for that. And that's not being done either for Ridgecrest or many other areas.

RMN: Why is that not being done?

Stein: One of the things that you hear is that the market doesn't want sudden changes in risk. The market is relationship-driven, and continuity in price and risk is as important as anything else. If a company feels that it cannot re-price the risk, this risk is absorbed and essentially ignored.

While there are players in the market for whom continuity is more important than accuracy, there are others in the market for whom knowing what's going to happen next is valuable. It is valuable in a number of ways: whether or not a cat bond should be bought or sold, whether or not more or less reinsurance is needed, whether or not reinsurance is being properly priced, whether or not the company has the capital reserves to meet a sudden change in its exposure.

Temblor’s position is that accumulation managers should know the changed risk no matter what the company decides to do. The players who understand it can make the best moves because of it.

RMN: How do California’s efforts on earthquake resiliency figure into your expectations for damage, given how your model is anticipating losses?

Stein: In the last 20 to 30 years there's been a huge investment in infrastructure retrofit and a substantial investment in the retrofit of governmental buildings and public utilities, and in refurbished bridges, freeways, and some dams, which is laudable.

There's also an earthquake early warning system that has been developed for all of California. Under ideal circumstances it would give people about 10 seconds’ warning to drop, cover, and hold on. That will save lives in a scenario of a Garlock Fault rupture triggering a San Andreas rupture. That's also enormously good news.

But there are holes in this fabric because individuals have done a rather poor job of protecting themselves. I'm talking about seismically retrofitting older homes, and insuring homes, so that people don't face the full-frontal loss of the value of damaged buildings. In many respects on a personal basis, we are woefully unprepared for what we all know to be coming.